43 confident learning estimating uncertainty in dataset labels

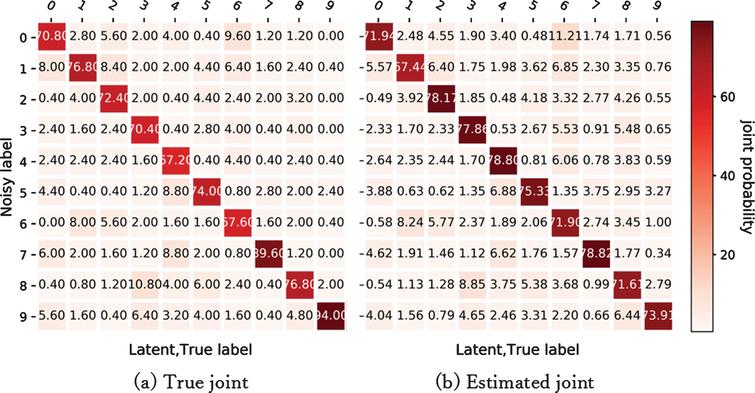

Confident Learning: Estimating Uncertainty in Dataset Labels. Curtis G. Northcutt, Lu Jiang, Isaac L. Chuang. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ...

Confident Learning: Estimating Uncertainty in Dataset Labels ... of the latent noise transition matrix (\(Q_{\tilde{y}|y^*}\)), the latent prior distribution of true labels (\(Q_{y^*}\)), or any latent, true labels (\(y^*\)). Definition 1 (Sparsity). A statistic to quantify the characteristic shape of the label noise, defined by the fraction of zeros in the off-diagonals of \(Q_{\tilde{y},y^*}\). High sparsity quantifies non-...
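To make the sparsity definition above concrete, here is a minimal Python sketch. It assumes the joint of noisy and true labels is available as a square list of lists; the function name `sparsity` and the matrix values are illustrative assumptions and do not come from the paper.

```python
def sparsity(joint):
    """Fraction of off-diagonal entries of a square matrix that are exactly zero."""
    k = len(joint)
    off_diag = [joint[i][j] for i in range(k) for j in range(k) if i != j]
    if not off_diag:
        return 0.0  # a 1x1 matrix has no off-diagonal entries
    return sum(1 for v in off_diag if v == 0) / len(off_diag)


# Hypothetical 3-class joint: rows are noisy labels, columns are true labels.
# The numbers are made up for illustration only.
Q = [
    [0.30, 0.05, 0.00],
    [0.00, 0.25, 0.00],
    [0.10, 0.00, 0.30],
]

print(sparsity(Q))  # 4 of the 6 off-diagonal entries are zero
```

A value near 1 would mean that most pairs of classes are never confused with each other, while a value of 0 would mean every class is mislabeled as every other class at some nonzero rate.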



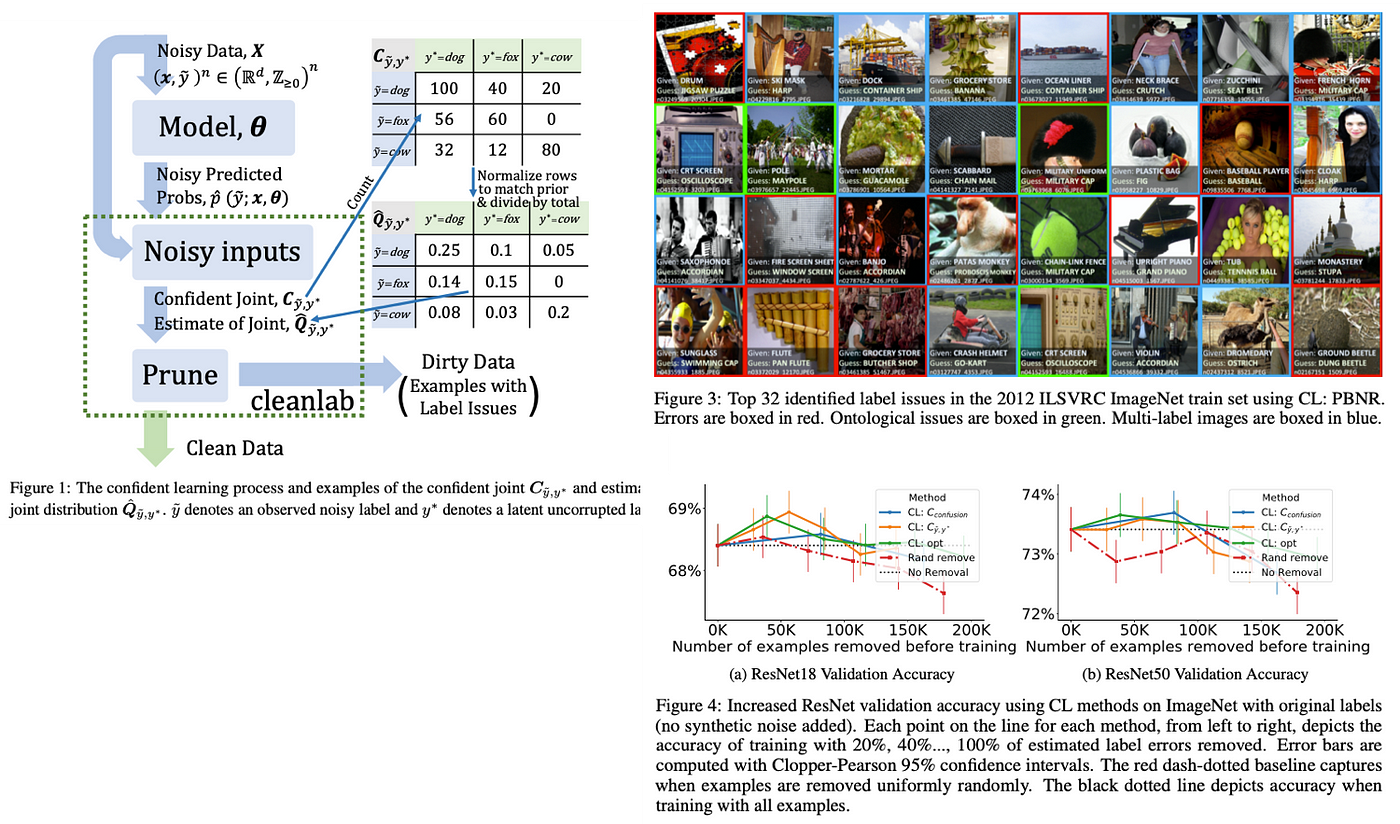

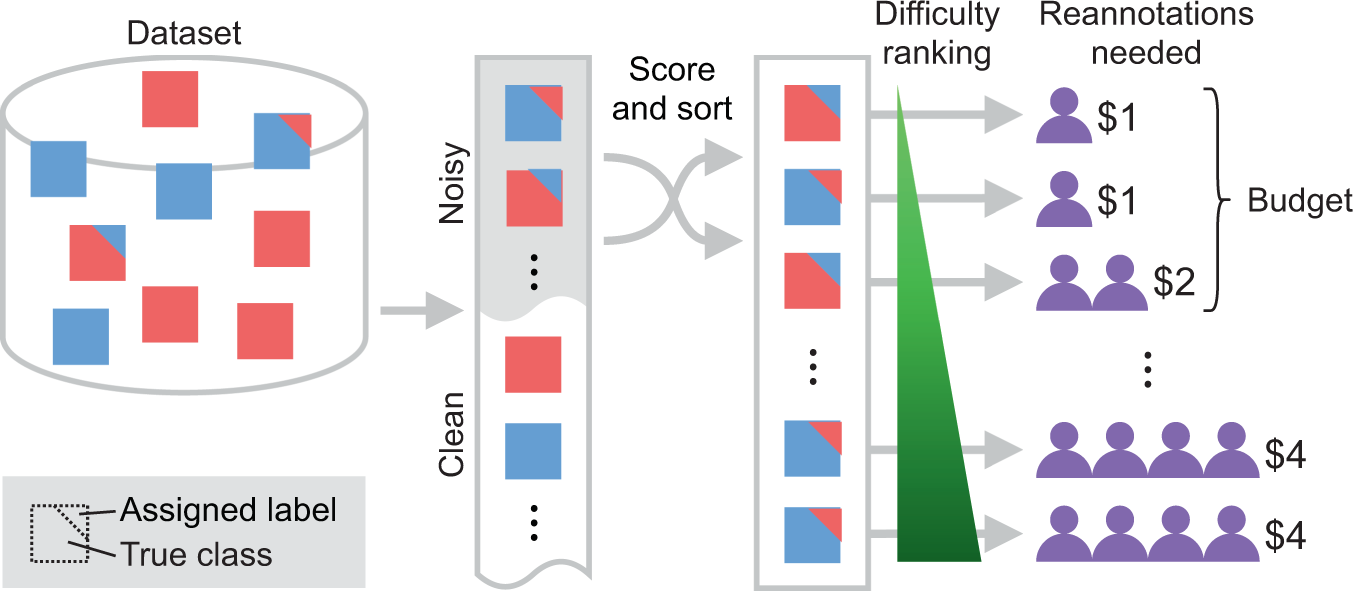

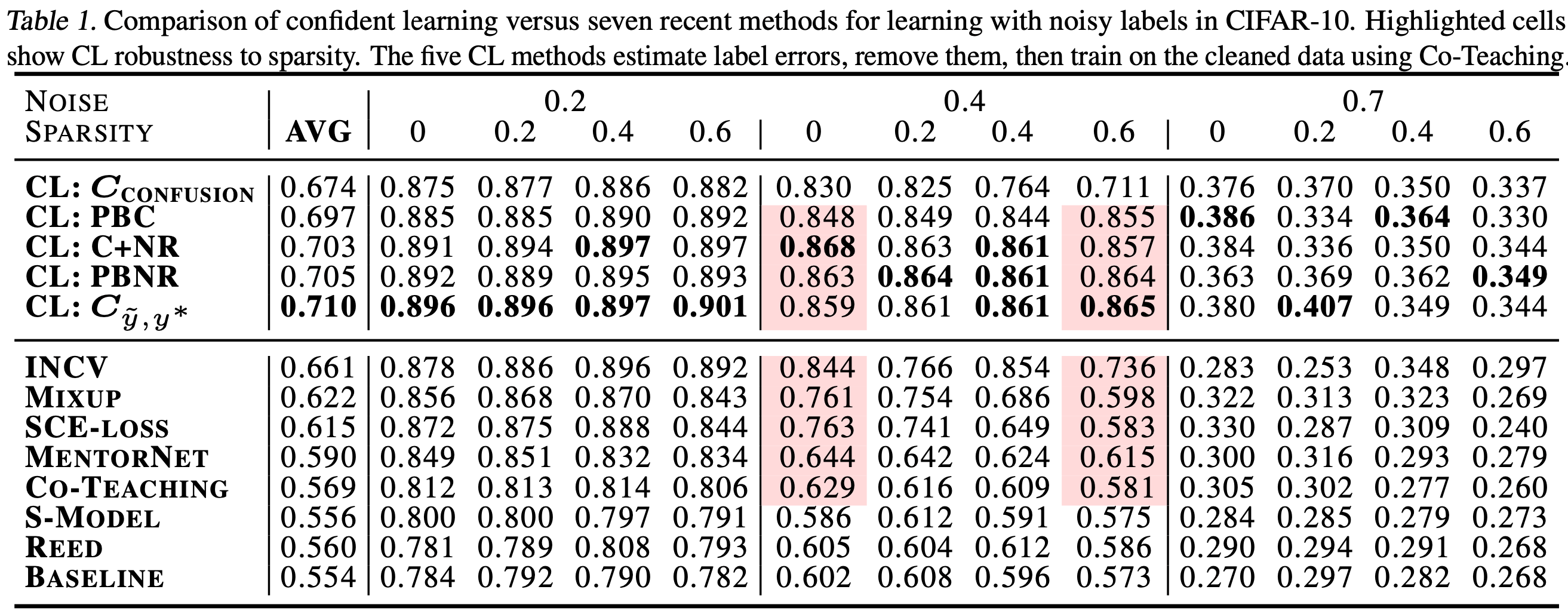

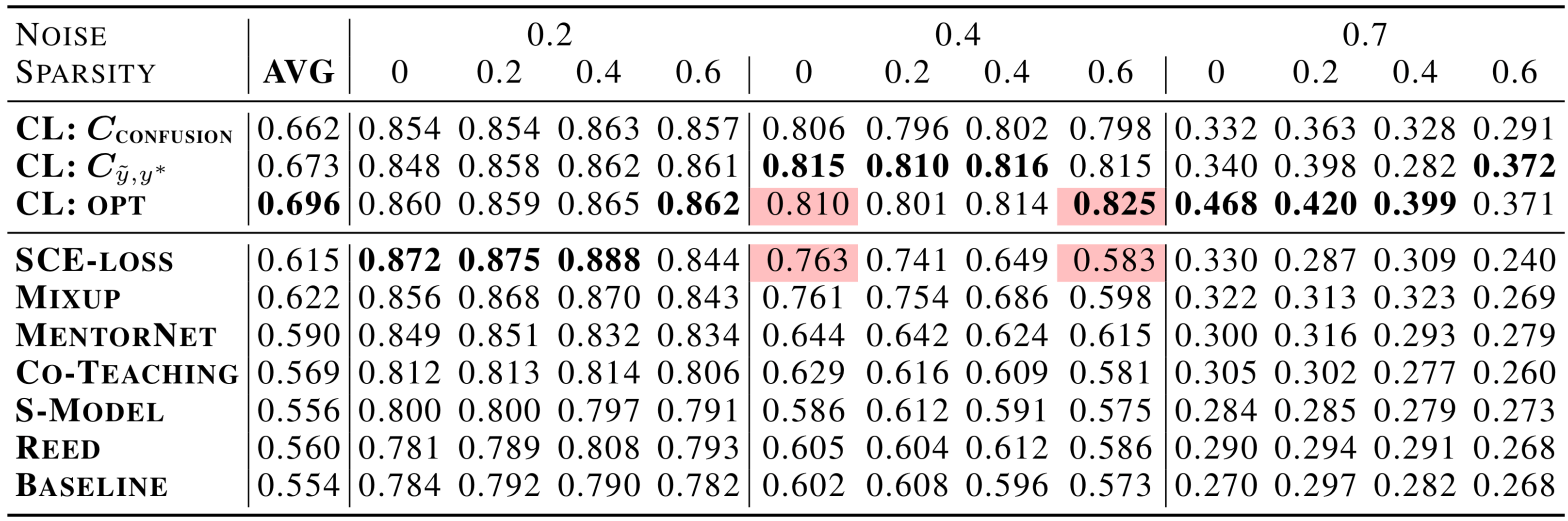

GitHub - cleanlab/cleanlab: The standard data-centric AI ... Comparison of confident learning (CL), as implemented in cleanlab, versus seven recent methods for learning with noisy labels in CIFAR-10. Highlighted cells show CL robustness to sparsity. The five CL methods estimate label issues, remove them, then train on the cleaned data using Co-Teaching.

GitHub - subeeshvasu/Awesome-Learning-with-Label-Noise: A ... 2019-Arxiv - Confident Learning: Estimating Uncertainty in Dataset Labels. 2019-Arxiv - Derivative Manipulation for General Example Weighting. 2020-ICPR - Towards Robust Learning with Different Label Noise Distributions. 2020-AAAI - Reinforcement Learning with Perturbed Rewards.

Confident Learning: Estimating Uncertainty in Dataset Labels. J. Artif. Intell. Res. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train ...

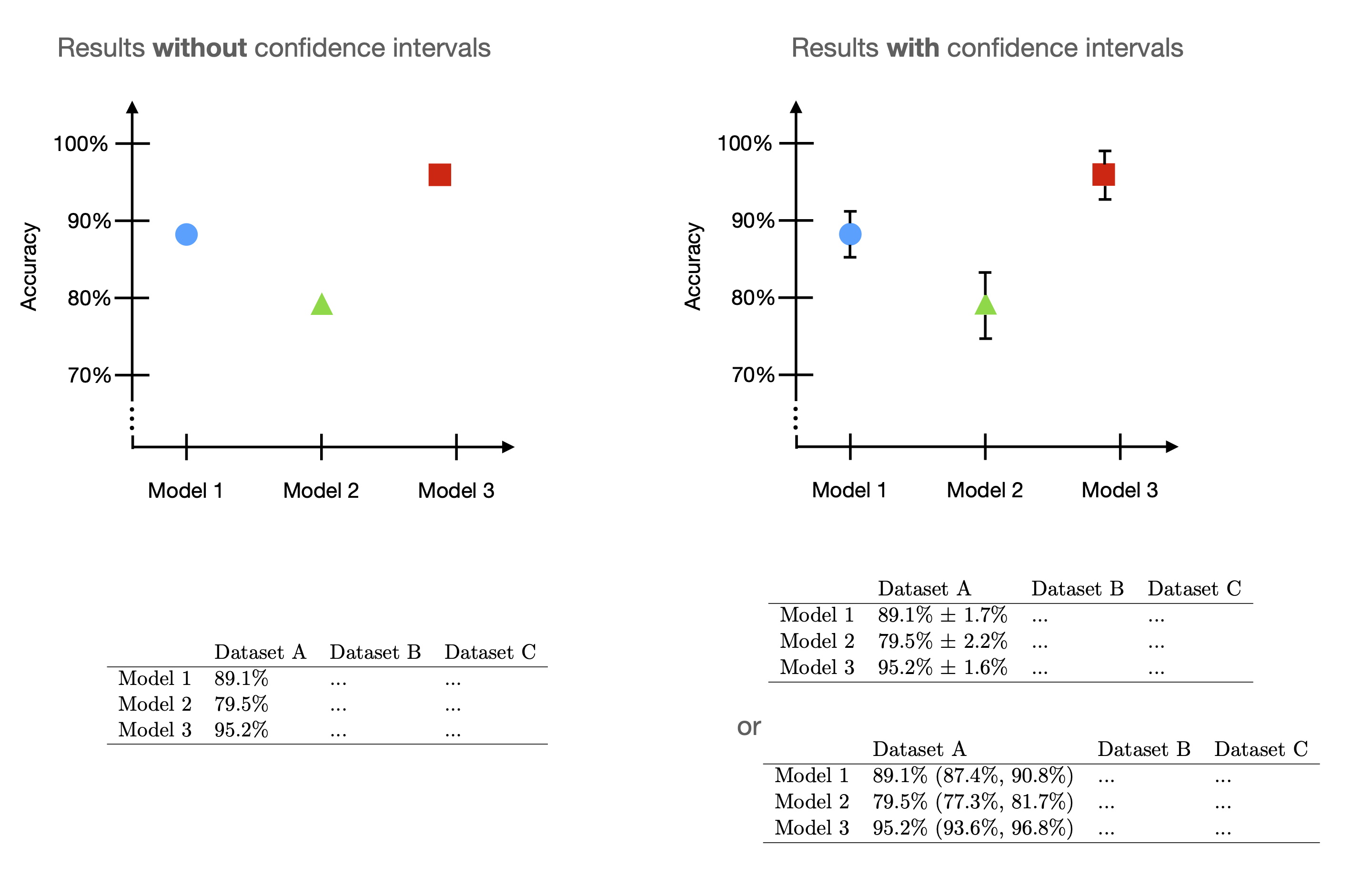

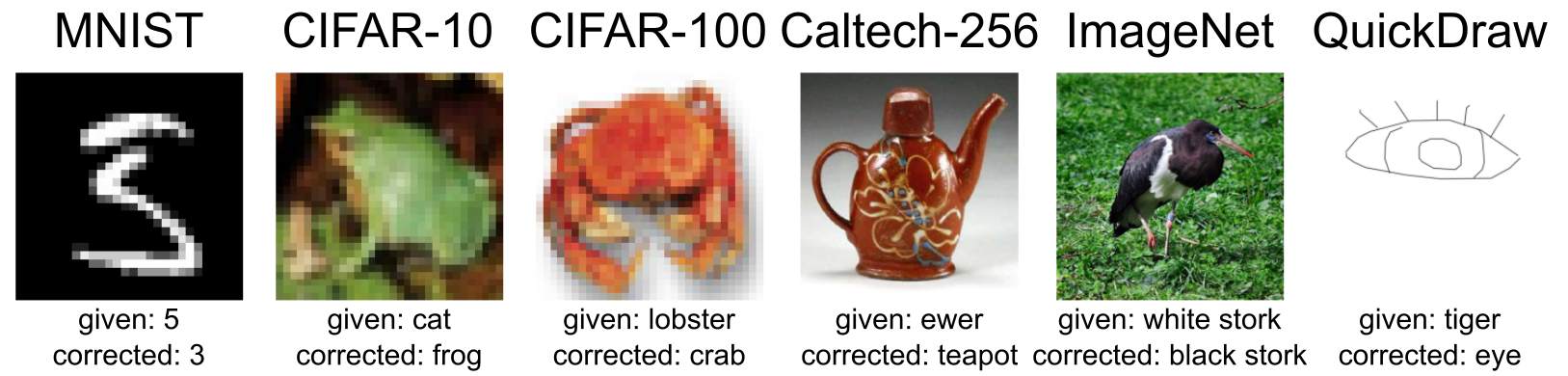

Confident Learning - Is That Label Correct? - 学習する天然ニューラルネット. What is this? The paper Confident Learning: Estimating Uncertainty in Dataset Labels, submitted to ICML 2020, was very interesting, so this post publishes a summary of it. Paper: [1911.00068] Confident Learning: Estimating Uncertainty in Dataset Labels. Quick overview: datasets can contain examples whose labels are wrong (noisy labels). Detecting such samples ...

Machine Learning Glossary | Google Developers Jul 18, 2022 · For example, a disease dataset in which 0.0001 of examples have positive labels and 0.9999 have negative labels is a class-imbalanced problem, but a football game predictor in which 0.51 of examples label one team winning and 0.49 label the other team winning is not a class-imbalanced problem.

Chipbrain Research | ChipBrain | Boston Confident Learning: Estimating Uncertainty in Dataset Labels By Curtis Northcutt, Lu Jiang, Isaac Chuang ... Errors in test sets are numerous and widespread: we estimate an average of 3.4% errors across the 10 datasets, where for example 2916 label errors comprise 6% of the ImageNet validation set. Putative label errors are identified using ...

PDF Confident Learning: Estimating Uncertainty in Dataset Labels - ResearchGate Confident Learning: Estimating Uncertainty in Dataset Labels. Curtis G. Northcutt, Lu Jiang, Isaac L. Chuang. Abstract: Learning exists in the context of data, yet notions of confidence typically ...

1. Introduction - Dive into Deep Learning 1.0.0-alpha1.post0 ... 1.2.1. Data. It might go without saying that you cannot do data science without data. We could lose hundreds of pages pondering what precisely data is, but for now, we will focus on the key properties of the datasets that we will be concerned with.

Confident Learning: Estimating Uncertainty in Dataset Labels Abstract. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

Paper walkthrough: Confident Learning: Estimating Uncertainty in Dataset Labels. We consider this problem from a data-centric perspective and arrive at the hypothesis that the key lies in how to accurately and directly characterize the uncertainty of noisy labels in a dataset. The concept of "confident learning" was proposed to address this uncertainty, and two aspects of it stand out. First, label noise depends only on the latent true class ...

[R] Announcing Confident Learning: Finding and Learning with Label ... Title: Confident Learning: Uncertainty Estimation for Dataset Labels. Abstract: Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence.
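The class-conditional assumption mentioned in the snippets above (label noise depends only on the latent true class) can be illustrated with a small simulation. This is a sketch under stated assumptions: the function name `add_class_conditional_noise`, the transition matrix values, and the seed are hypothetical and chosen only for illustration.

```python
import random


def add_class_conditional_noise(true_labels, transition, seed=0):
    """Sample a noisy label for each true label.

    transition[i][j] is the assumed probability that an example whose
    true class is i receives the observed (noisy) label j. The features
    of the example play no role, which is the class-conditional assumption.
    """
    rng = random.Random(seed)
    classes = list(range(len(transition)))
    return [rng.choices(classes, weights=transition[y])[0] for y in true_labels]


# Hypothetical 2-class noise: class 0 is flipped to 1 in 20% of cases,
# class 1 is never flipped.
transition = [
    [0.8, 0.2],
    [0.0, 1.0],
]
true_labels = [0] * 10 + [1] * 10
noisy_labels = add_class_conditional_noise(true_labels, transition)
print(noisy_labels)
```

Because class 1 has zero probability of being flipped in this made-up matrix, every example whose true label is 1 keeps its label, whatever the seed.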

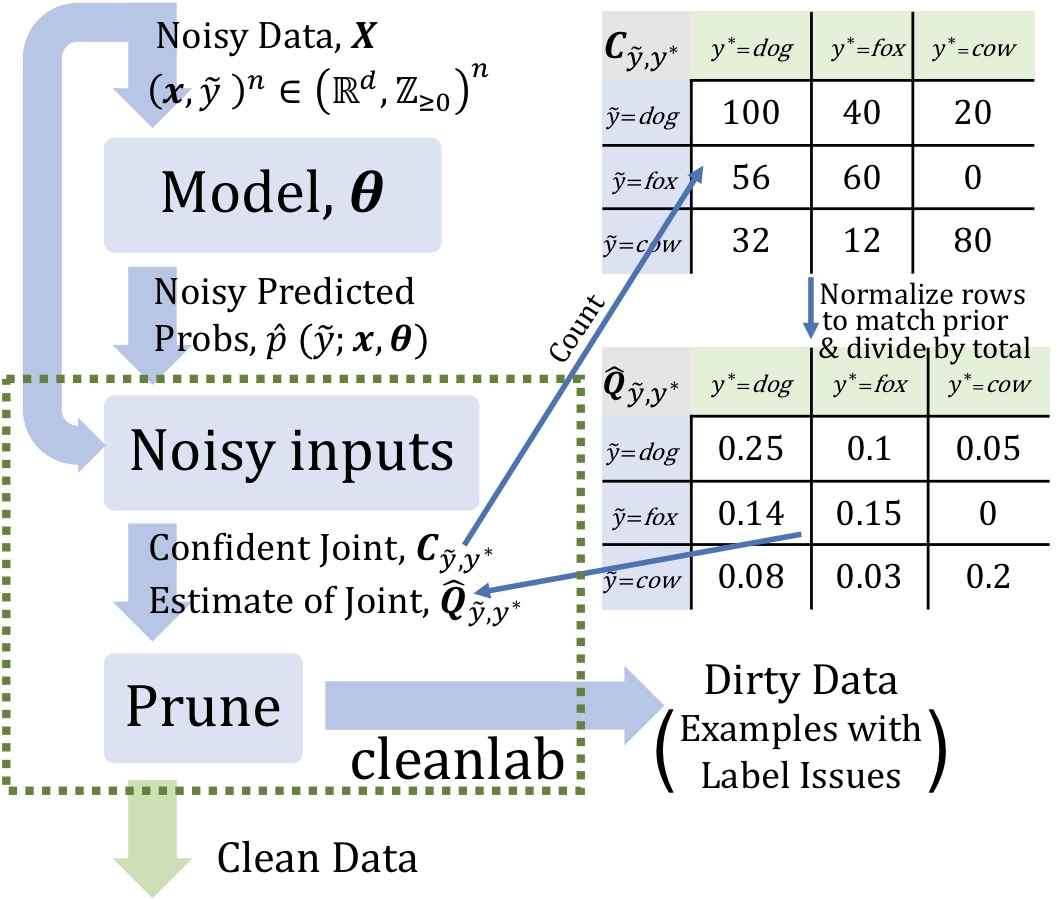

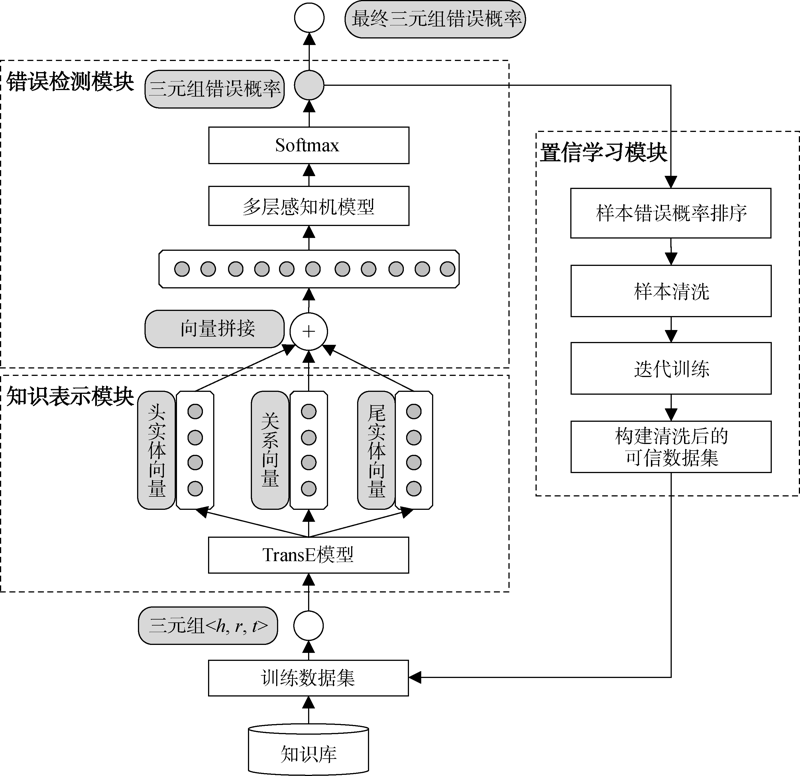

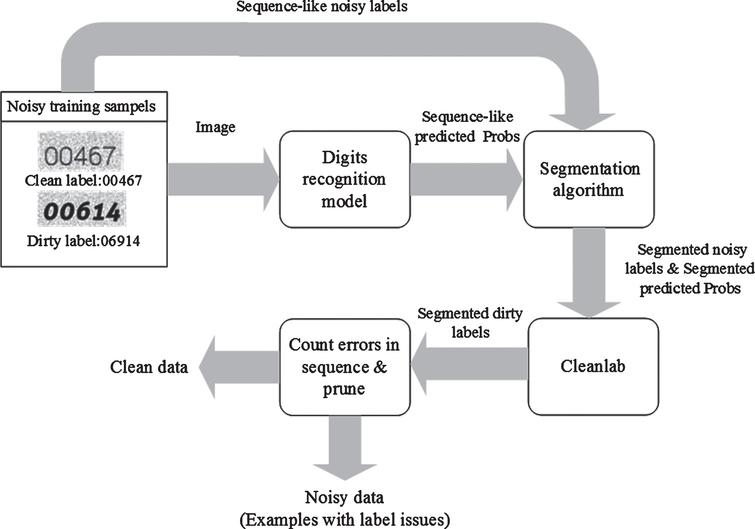

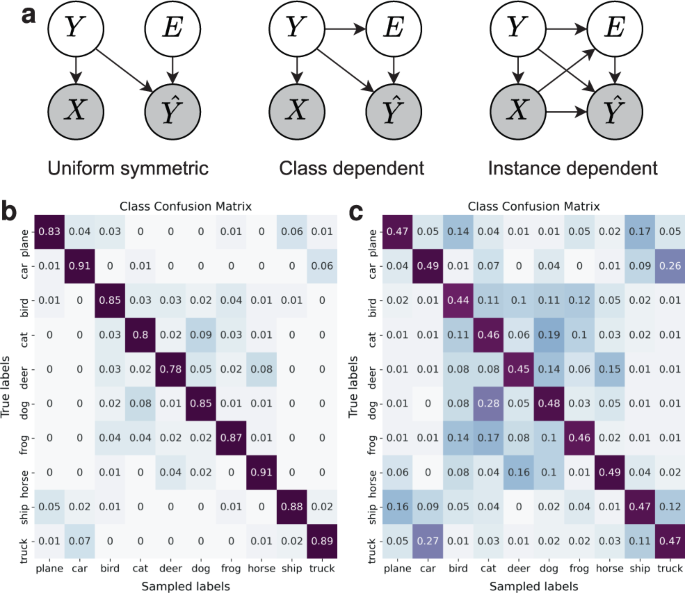

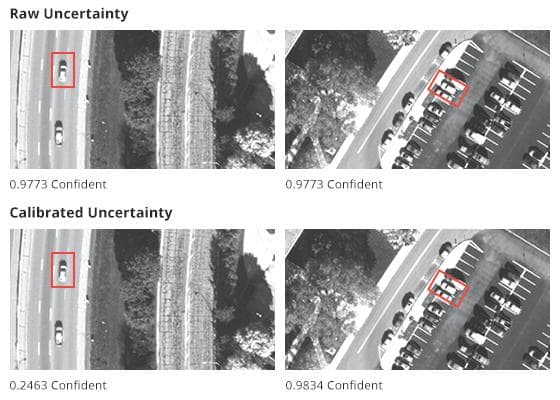

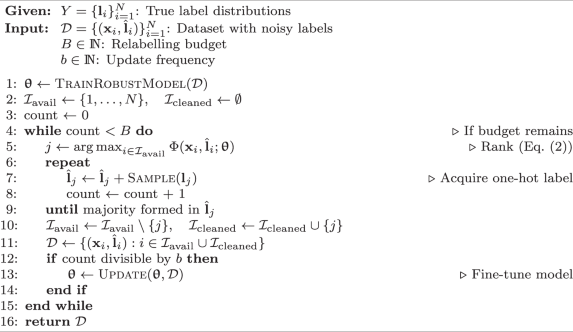

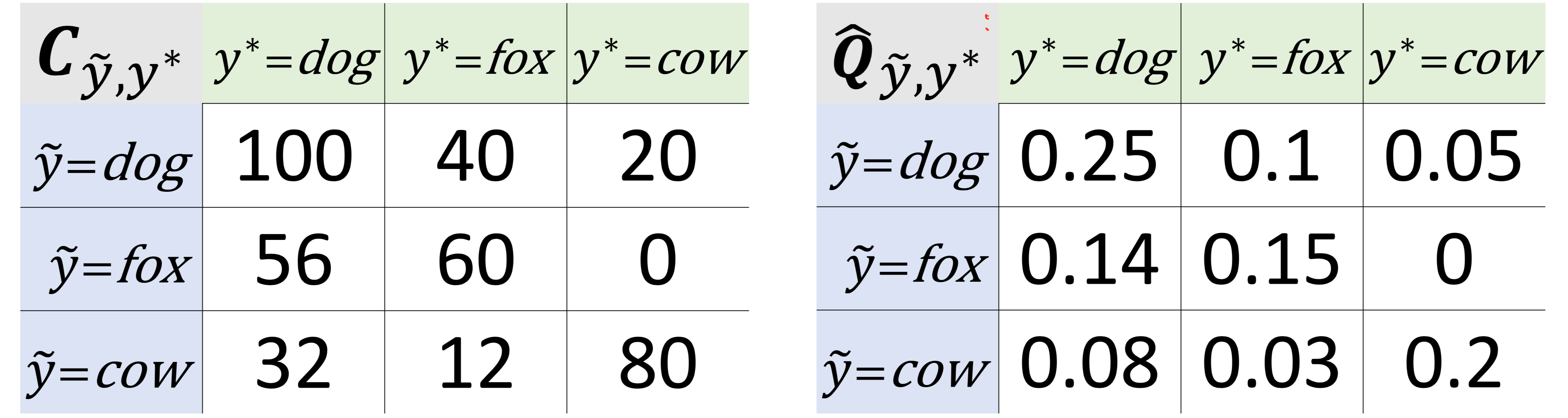

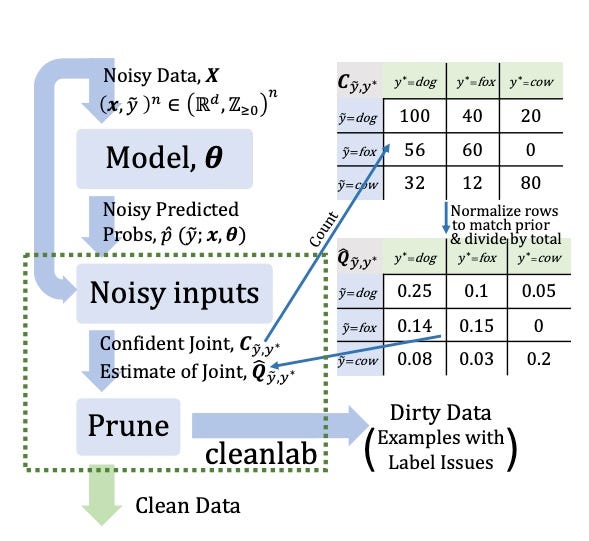

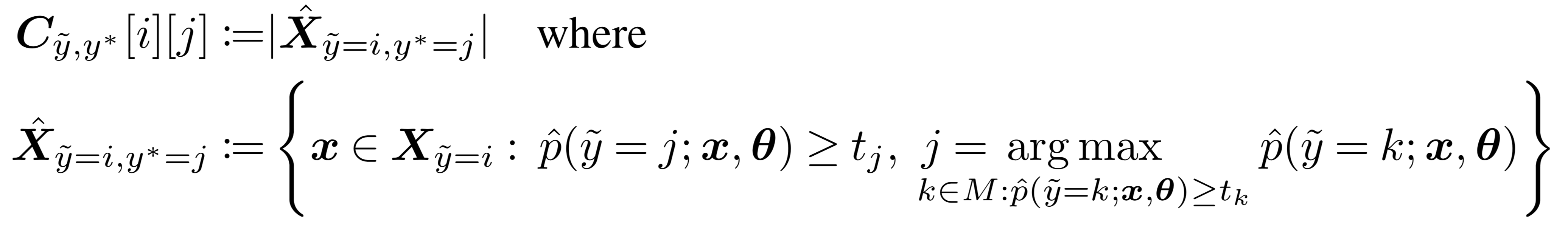

Are Label Errors Imperative? Is Confident Learning Useful? Confident learning (CL) is a class of learning where the focus is to learn well despite some noise in the dataset. This is achieved by accurately and directly characterizing the uncertainty of label noise in the data. CL rests on the assumption that label noise is class-conditional, depending only on the latent true class, not the data.

Characterizing Label Errors: Confident Learning for Noisy-Labeled Image ... 2.2 The Confident Learning Module. Based on the assumption of Angluin, CL can identify the label errors in the datasets and improve the training with noisy labels by estimating the joint distribution between the noisy (observed) labels \(\tilde{y}\) and the true (latent) labels \({y^*}\). Remarkably, no hyper-parameters and few extra ...

Confident Learning: Estimating Uncertainty in Dataset Labels. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

Data Noise and Label Noise in Machine Learning. Aleatoric, epistemic and label noise can detect certain types of data and label noise [11, 12]. Reflecting the certainty of a prediction is an important asset for autonomous systems, particularly in noisy real-world scenarios. Confidence is also utilized frequently, though it requires well-calibrated models.
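The idea of estimating the joint distribution between noisy and true labels by counting with probabilistic thresholds can be sketched in a few lines of Python. This is a simplified illustration, not the authors' implementation: it assumes out-of-sample predicted probabilities are already available, uses the mean self-confidence of each class as its threshold, and skips the calibration step that the paper applies before normalizing. All function names and data values are hypothetical.

```python
def class_thresholds(labels, pred_probs, num_classes):
    """Threshold for class j: mean predicted probability of j over examples labeled j."""
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for y, p in zip(labels, pred_probs):
        sums[y] += p[y]
        counts[y] += 1
    # A class with no examples gets threshold 1.0 so it is rarely selected.
    return [s / c if c else 1.0 for s, c in zip(sums, counts)]


def confident_joint(labels, pred_probs, num_classes):
    """Count examples by (given label, confidently predicted class)."""
    t = class_thresholds(labels, pred_probs, num_classes)
    counts = [[0] * num_classes for _ in range(num_classes)]
    for y, p in zip(labels, pred_probs):
        # Classes whose predicted probability reaches the class threshold.
        candidates = [j for j in range(num_classes) if p[j] >= t[j]]
        if candidates:
            likely_true = max(candidates, key=lambda j: p[j])
            counts[y][likely_true] += 1
    return counts


def normalize(counts):
    """Turn counts into a joint distribution estimate (no calibration applied)."""
    total = sum(sum(row) for row in counts)
    return [[c / total for c in row] for row in counts]


# Made-up given labels and predicted probabilities for six examples.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
    [0.1, 0.9], [0.3, 0.7], [0.7, 0.3],
]

C = confident_joint(labels, pred_probs, num_classes=2)
print(C)             # off-diagonal counts mark likely label errors
print(normalize(C))  # rough estimate of the joint of noisy and true labels
```

In this toy data the third and sixth examples land in off-diagonal cells, because the model is confident in a class other than the given label.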


Tag Page | L7 This post overviews the paper Confident Learning: Estimating Uncertainty in Dataset Labels authored by Curtis G. Northcutt, Lu Jiang, and Isaac L. Chuang. machine-learning confident-learning noisy-labels deep-learning

Learning with Neighbor Consistency for Noisy Labels | DeepAI Recent advances in deep learning have relied on large, labelled datasets to train high-capacity models. However, collecting large datasets in a time- and cost-efficient manner often results in label noise. We present a method for learning from noisy labels that leverages similarities between training examples in feature space, encouraging the prediction of each example to be similar to its ...


Confident Learning: Estimating Uncertainty in Dataset Labels - ReadkonG Page topic: "Confident Learning: Estimating Uncertainty in Dataset Labels - arXiv.org". Created by: Marcus Perez. Language: english.

Learning with noisy labels | Papers With Code. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

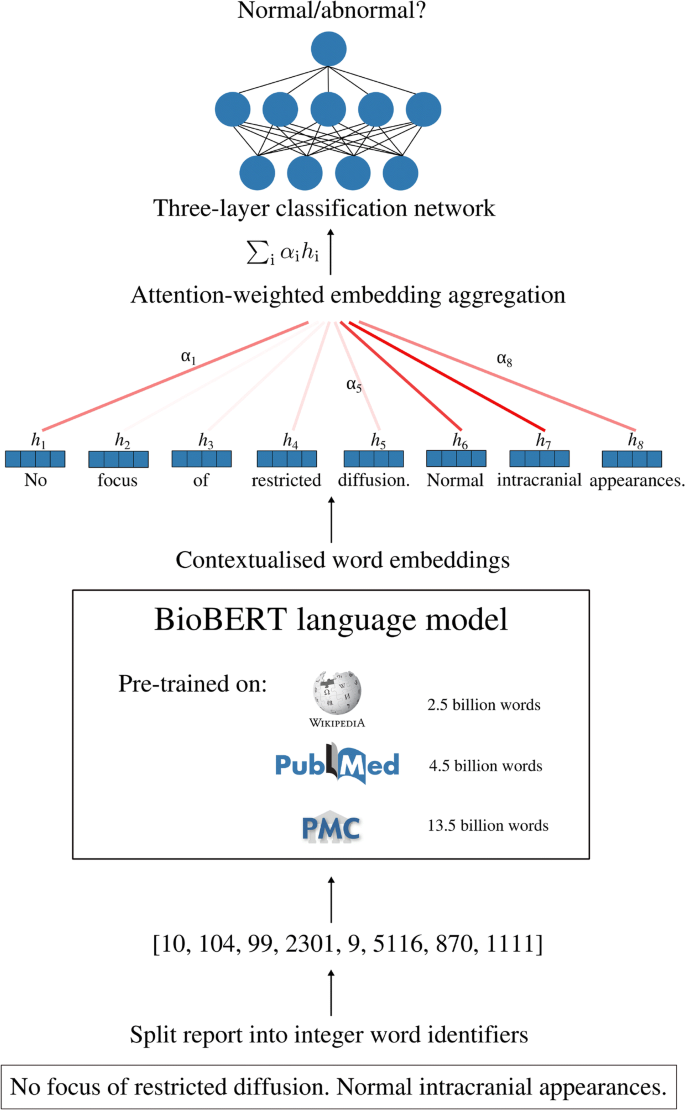

Find label issues with confident learning for NLP. Estimate noisy labels: We use the Python package cleanlab, which leverages confident learning to find label errors in datasets and to learn with noisy labels. It's called cleanlab because it CLEANs LABels. cleanlab is: fast - single-shot, non-iterative, parallelized algorithms
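The ranking and pruning principles behind finding label issues can be shown without any library. The sketch below is not cleanlab's API (recent cleanlab releases expose comparable functionality through `cleanlab.filter.find_label_issues`, which is not called here). It assumes predicted probabilities are already available, and the helper names and data are hypothetical.

```python
def rank_by_self_confidence(labels, pred_probs):
    """Return example indices ordered from least to most confident in the given label."""
    self_conf = [p[y] for y, p in zip(labels, pred_probs)]
    return sorted(range(len(labels)), key=lambda i: self_conf[i])


def prune_lowest(labels, pred_probs, n_remove):
    """Return the indices kept after dropping the n_remove least confident examples."""
    drop = set(rank_by_self_confidence(labels, pred_probs)[:n_remove])
    return [i for i in range(len(labels)) if i not in drop]


# Made-up given labels and predicted probabilities for four examples.
labels = [0, 1, 1, 0]
pred_probs = [[0.95, 0.05], [0.6, 0.4], [0.1, 0.9], [0.3, 0.7]]

ranked = rank_by_self_confidence(labels, pred_probs)
print(ranked)                               # most suspicious example first
print(prune_lowest(labels, pred_probs, 1))  # indices to keep for retraining
```

In practice the number of examples to remove would come from an estimate of the noise rate rather than a fixed constant; the fixed `n_remove` here only keeps the sketch short.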

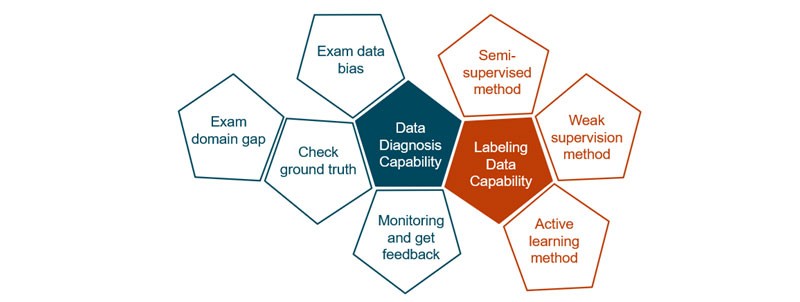

Active label-denoising algorithm based on broad learning for annotation ... However, this success is predicated on the correctly annotated datasets. Labels in large industrial datasets can be noisy and thus degrade the performance of fault diagnosis models. The emerging concept of broad learning shows the potential to address the label noise problem. ... Chuang I. Confident learning: Estimating uncertainty in dataset ...


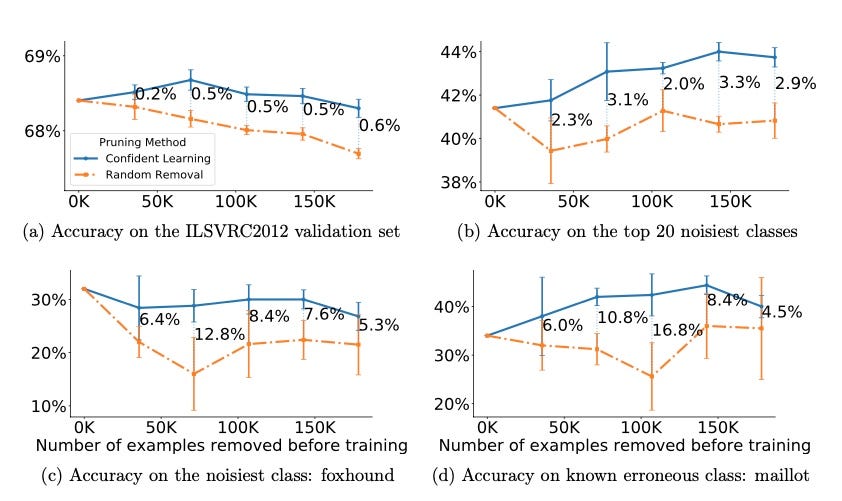

An Introduction to Confident Learning: Finding and Learning ... Our theoretical and experimental results emphasize the practical nature of confident learning, e.g. identifying numerous label issues in ImageNet and CIFAR and improving standard ResNet performance by training on a cleaned dataset. Confident learning motivates the need for further understanding of uncertainty estimation in dataset labels, methods to clean training and test sets, and approaches to identify ontological and label issues in datasets.


How to Implement Bayesian Optimization from Scratch in Python Aug 22, 2020 · In machine learning, these libraries are often used to tune the hyperparameters of algorithms. Hyperparameter tuning is a good fit for Bayesian Optimization because the evaluation function is computationally expensive (e.g. training models for each set of hyperparameters) and noisy (e.g. noise in training data and stochastic learning algorithms).


![Active Learning in Machine Learning [Guide & Examples]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/633a98dcd9b9793e1eebdfb6_HERO_Active%20Learning%20.png)

![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/29-Figure16-1.png)

![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/7-Figure2-1.png)
